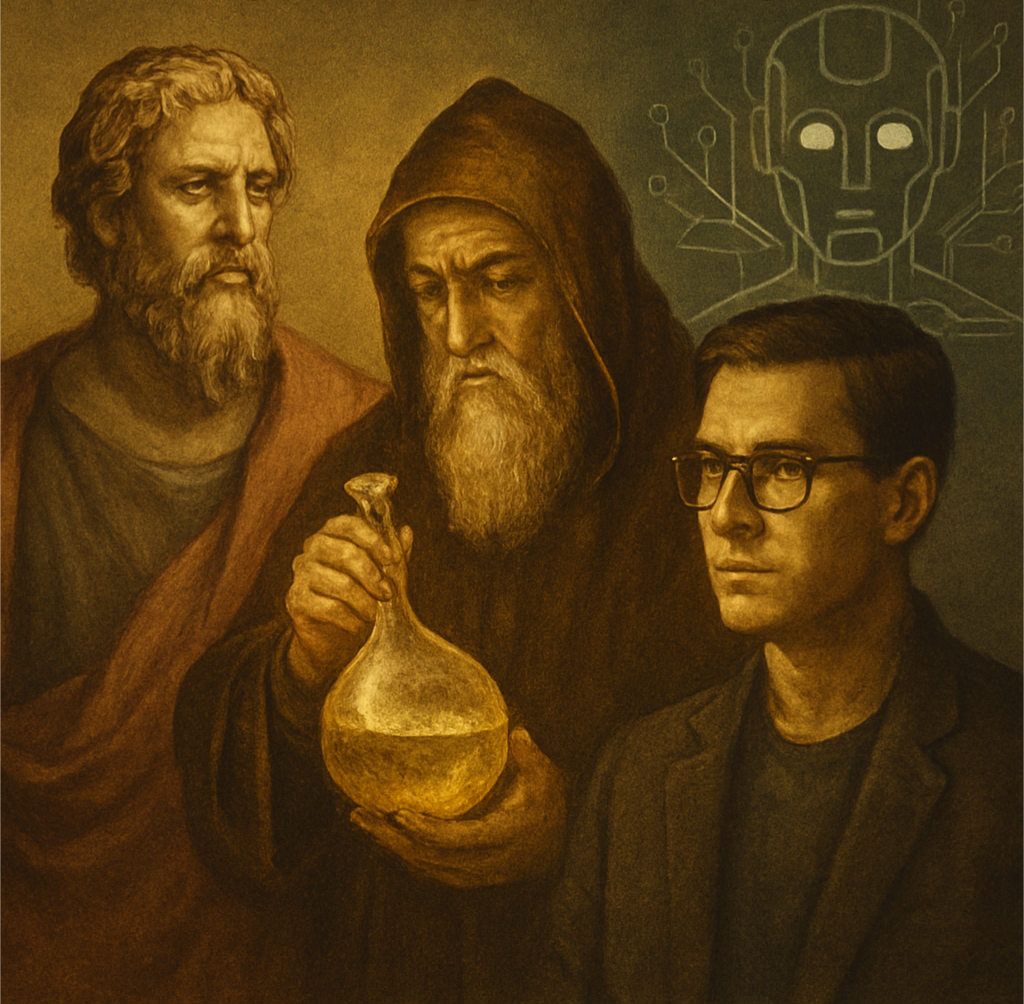

From Sophists to Alchemists to AI Coders: The Technique Without Understanding

We believe we are at the cutting edge of history, but we may just be repeating its oldest mistakes. From the Sophists’ empty rhetoric to the Alchemists’ blind experimentation, humanity has a long history of mastering technique without possessing understanding. This dialogue explores how today’s AI-driven world is creating a new class of "technician-priests" who command powerful tools they cannot truly explain—and why this gap between output and insight is a looming crisis for modern computation.

Dr. Marcus Doubtful, a former quantum physicist turned philosopher of science.

Dr. Andrew Sofist, a computational physicist and AI researcher who has just delivered a triumphant presentation on large language models' reasoning capabilities.

Andrew: Marcus, I have to say your critique of AI during the Q&A was... unnecessarily philosophical. Look at the results we're getting - LLMs solving complex mathematical problems, making scientific discoveries, even writing coherent philosophy papers. The proof is in the pudding. We don't need to understand every detail of how neural networks work to use them effectively.

Marcus: But Andrew, do you hear yourself? "We don't need to understand... to use them effectively." That's essentially saying "shut up and calculate" - the same attitude that's infected physics for decades. Quantum mechanics works, so stop asking what it means. AI works, so stop asking how it thinks.

Andrew: Exactly! And it's been incredibly successful. The 20th century gave us nuclear power, computers, GPS, the internet - all because we focused on what works rather than getting bogged down in metaphysical speculation. Science describes the external world as it actually is. Our tools are getting more precise, more powerful. AI is just the next step in that trajectory.

Marcus: But Andrew, you've just articulated a complete philosophical position while claiming to avoid philosophy. You're assuming that science "describes the external world as it actually is." That's a massive metaphysical assumption - that there's an objective external world independent of observers, that our mathematical tools can capture its essence, that truth equals predictive accuracy.

Andrew: It's not an assumption, it's empirically verified! Our theories make predictions that come true. We can land rovers on Mars, cure diseases, build technologies that transform civilization. If that's not capturing reality, what is?

Marcus: I'm not disputing the practical successes - they're remarkable. But notice how you keep conflating effectiveness with truth. Your faith that "rationality is fundamental" - that mathematical and logical tools will ultimately lead us to truth - is itself a conviction, not a conclusion.

Andrew: Faith? Conviction? Marcus, mathematics and logic are the most rigorous tools we have. They're universal, objective, reliable.

Marcus: Are they? Let me ask you something - have you heard of Gödel's incompleteness theorems?

Andrew: Something about mathematical systems... but what's that got to do with practical applications?

Marcus: Everything. Gödel showed that any consistent formal system powerful enough to express basic arithmetic contains true statements it cannot prove, and cannot prove its own consistency. Mathematics cannot ground itself mathematically. Then there's Russell's paradox in set theory, the halting problem in computation, the liar paradox in logic. Our most rigorous tools are riddled with self-referential limits.

Andrew: Those are abstract philosophical problems. They don't affect real-world applications.

Marcus: Don't they? When AI systems hallucinate - generate convincing but false information - isn't that exactly the same pattern? The system produces outputs that seem coherent but may have no relationship to truth. It's optimizing for persuasiveness.

Andrew: Hallucination is just a technical problem we're solving. Better training data, improved architectures, more robust testing...

Marcus: But you're missing the deeper issue. Your approach to AI is remarkably similar to what the ancient Greeks called sophistry. Have you heard of the Sophists?

Andrew: No, should I have?

Marcus: This is what I mean about narrow education. The Sophists were the first professional teachers in ancient Greece, around the 5th century BCE. Protagoras, the most famous of them, declared that "man is the measure of all things." They could argue any position convincingly - make the weaker argument appear stronger through rhetorical skill.

Andrew: Sounds like good debaters. So?

Marcus: So they abandoned the search for truth in favor of persuasive effectiveness. They discovered that if you master the right techniques, you can make people believe anything. Sound familiar? Modern AI optimizes for outputs that seem convincing to humans, regardless of whether they correspond to reality.

Andrew: But AI systems are trained on vast amounts of real data...

Marcus: So were the Sophists! They studied human psychology, social dynamics, linguistic patterns. They became incredibly sophisticated at producing the desired effects. But Plato and Aristotle recognized the danger - when technique becomes disconnected from the pursuit of truth, you get a kind of civilizational crisis.

Andrew: You're being dramatic, Marcus. We're not in crisis - we're making unprecedented progress.

Marcus: Are we? Or are we making the same mistake medieval alchemists made?

Andrew: I don't know what you're talking about. Can we stick to science please?

Marcus: Alchemists were the AI researchers of their time, Andrew. They performed elaborate experiments, followed complex procedures, occasionally achieved spectacular results. They could turn base metals into something that looked like gold, at least temporarily. They had impressive equipment, secretive methods, devoted followers.

Andrew: But that is pseudoscience...

Marcus: Exactly! But from inside the alchemical worldview, it looked like cutting-edge natural philosophy. They were empirically tinkering, getting results they couldn't explain, optimizing their techniques without understanding the underlying principles. Does that sound familiar?

Andrew: AI is based on rigorous mathematics, statistical learning theory, massive computational resources...

Marcus: And alchemy was based on careful observation, systematic experimentation, sophisticated apparatus. The difference isn't the sophistication of the methods - it's understanding. Alchemists confused operational success with genuine understanding. They could sometimes produce impressive effects without comprehending what they were actually doing.

Andrew: You're saying modern AI is just sophisticated alchemy?

Marcus: I'm saying both represent a retreat from understanding into technique mastery. The alchemist's gold might fool people temporarily, but it wasn't real gold. AI's outputs might be convincing, but are they real understanding?

Andrew: Even if you're right about some limitations... what's the alternative? Are you suggesting we abandon scientific methods? Go back to pure philosophy?

Marcus: No, that would be throwing out the baby with the bathwater. I'm suggesting we need a more comprehensive worldview - one that includes mathematics, logic, programming, the scientific method, and AI, but doesn't take any of them as ultimate or self-grounding.

Andrew: What would that look like?

Marcus: Think about it this way - you're using consciousness to study consciousness, intelligence to study intelligence, mathematical reasoning to ground mathematical reasoning. Every time we try to step completely outside our own perspective to study reality "objectively," we run into self-referential problems.

Andrew: So you're saying objectivity is impossible?

Marcus: I'm saying the sharp distinction between subjective observer and objective reality might be a conceptual artifact. What if the world, the knower and the known are more intimately connected than our current paradigm assumes?

Andrew: That sounds... mystical.

Marcus: Does it? Or does it sound like what quantum mechanics has been suggesting for a century? That the observer and observed can't be cleanly separated? That measurement plays a constitutive role in determining what properties manifest?

Andrew: I... hadn't thought about it that way.

Marcus: Here's what I think is happening, Andrew. We've created incredibly sophisticated tools for pattern recognition and optimization, but we've lost the capacity to step back and ask: What patterns are worth recognizing? What should we optimize for? Those aren't technical questions - they require wisdom, judgment, an understanding of meaning and value.

Andrew: But how do we verify wisdom? How do we know what's worth optimizing for?

Marcus: That's the right question! And it can't be answered purely through calculation or data analysis. It requires what the ancients called phronesis - practical wisdom that emerges through lived experience, contemplative reflection, engagement with questions of meaning and purpose.

Andrew: But that's subjective...

Marcus: Only if you maintain the rigid subject/object distinction. What if some forms of knowledge are inherently participatory? You can't understand love by studying neurotransmitters, or comprehend mortality by analyzing biological processes, or grasp the meaning of existence through computational modeling.

Andrew: So you're saying there are different types of knowledge? Different ways of approaching truth?

Marcus: Yes! And that's not anti-scientific - it's meta-scientific. A truly rigorous approach would recognize the appropriate domains for different methods. Use mathematical modeling for engineering problems, but not for comprehending the concepts of life and intelligence. Use algorithmic optimization for logistics, but not for determining human values.

Andrew: But how do we avoid relativism? If there are multiple ways of knowing, who decides which one to use when?

Marcus: That's where wisdom comes in - the cultivated capacity to recognize what kind of approach a situation requires. It's not relativism because some approaches really are better suited for certain domains. You wouldn't use poetry to design a bridge or use engineering calculations to comfort a grieving friend.

Andrew: Go on.

Marcus: Maybe we've been asking the wrong question. Instead of trying to create artificial understanding, we should be asking: What can we say about understanding? What can we say about life and intelligence? And maybe those questions can't be answered from within the current paradigm because they're not technical problems but questions about the nature of being itself.

Andrew: So where does that leave us? Where do we go from here?

Marcus: We develop what I call a meta-paradigm - a framework that can comprehend mathematics, logic, science, and AI without being reducible to any of them. One that shows where and why each approach reaches its limits, and provides guidance for when to use which tools. We call it Geneosophy.

Andrew: And you think this meta-paradigm can dissolve the paradoxes and mysteries you mentioned?

Marcus: I think it can show that many apparent paradoxes arise from applying methods outside their appropriate domains. The "hard problem of consciousness," the "mystery of life," the "nature of intelligence" - these might not be unsolvable problems but category errors. Questions that require new conceptual tools rather than traditional mathematical, logical and programming tools.

Andrew: It's a lot to consider. I spent my whole career assuming that more computational power and better algorithms would eventually solve everything...

Marcus: And they might solve everything that can be solved computationally. But life, consciousness, meaning, intelligence - these might belong to a different category entirely.

Andrew: You know what's ironic? I came into this conversation thinking you were anti-science. But you're actually asking for more rigorous thinking about science - thinking about its foundations, its limits, its proper applications.

Marcus: Exactly. True scientific thinking includes recognizing the boundaries of scientific applicability. The most rigorous approach is one that doesn't overextend its methods beyond their domain of validity.

Andrew: So we're not abandoning reason or evidence or mathematical rigor...

Marcus: We're liberating them from the burden of having to answer every question. Some questions require different forms of engagement. And that's not a weakness of reason but a recognition of its proper scope.

Andrew: You know, Marcus, I think I need to read some philosophy. Real philosophy, not just technical papers. Where would you suggest I start?

Marcus: Not just philosophy. Philosophy has its own limitations. Start with the questions that your computational systems can't answer: What does it mean to understand something? What is the relationship between mind and reality? What are the recognized limits of mathematics, logic and programming? Why do some biologists question the reductionist approach of the scientific method? There are many questions out there. Let those questions work on you, rather than trying to solve them immediately.

Andrew: Thanks!

Marcus: And maybe... maybe those questions will work on our technologies too. Maybe they'll help us build systems that serve human flourishing rather than just optimizing arbitrary metrics.